Your data quality dashboard shows all green. Your pipeline just merged duplicate records and nobody noticed for a week.

Or maybe it’s the opposite. Your unit tests all pass. You deploy with confidence. Then your pipeline breaks in production because the upstream API changed a field name. Does this bring back vivid memories? 😊

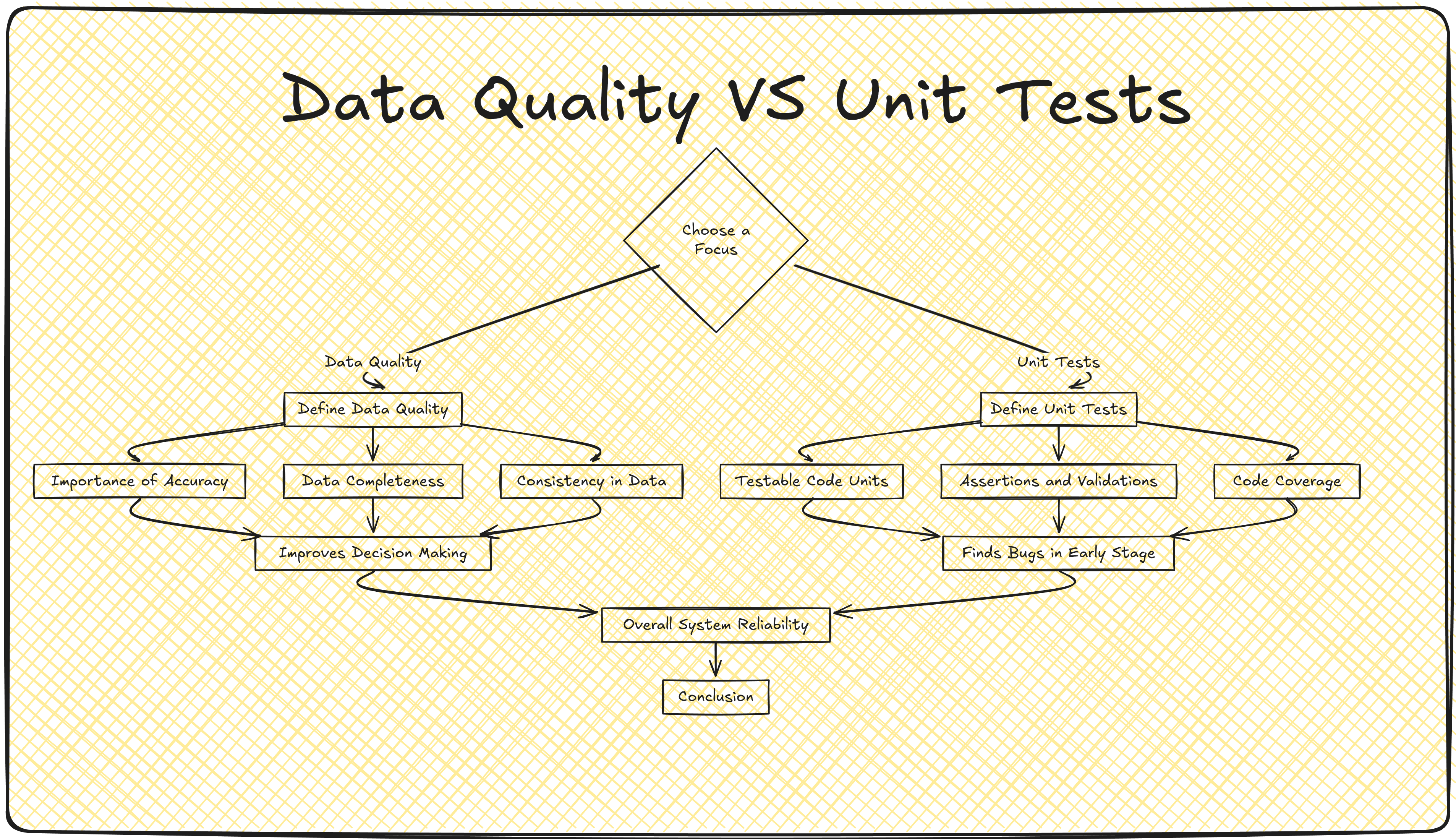

Here’s the fact: most data engineering teams either over-rely on data quality checks or confuse them with unit tests.

Both are critical, but they catch completely different problems. If you mix them up, you’ll have blind spots in your testing strategy.

In this post, I’ll show you real examples where each approach fails on its own. By the end, you’ll know exactly when you need a unit test versus when you need a data quality check.

🔍 Quick Definitions (So We’re on the Same Page)

Let’s get clear on what we’re talking about.

Data quality checks validate your data. They answer questions like: Is this field null when it shouldn’t be? Are these values in the expected range? Does this schema match what we expect?

Unit tests validate your code logic. They answer: Does this function do what I think it does? Does my transformation handle edge cases correctly? Does my join logic work as intended?

Both prevent problems. But they prevent different problems.

⚠️ Real Example #1: Where Data Quality Checks Miss the Bug

The Scenario: Revenue Calculation with Returns

Let’s say you’re building a pipeline to calculate daily revenue for an e-commerce platform. Simple enough, right?

Here’s your transformation logic:
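
A minimal sketch of what that logic might look like, written in plain Python so it runs anywhere. The `orders` structure and its `price` and `quantity` keys are assumptions for illustration, not a real schema:

```python
# Hypothetical input: one dict per order line, with "price" and "quantity" keys.
def calculate_daily_revenue(orders):
    """Naive version: add up price * quantity for every order line."""
    return sum(order["price"] * order["quantity"] for order in orders)


orders = [
    {"price": 50.0, "quantity": 3},
    {"price": 20.0, "quantity": 1},
]
print(calculate_daily_revenue(orders))  # 170.0
```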

You set up your data quality checks:

- ✅ All prices are positive

- ✅ No null quantities

- ✅ Revenue values are reasonable (between $0 and $1M per day)

- ✅ No missing dates

Everything passes. Your dashboard is green. Ship it.

But here’s what you missed: some quantities are negative. These are returns. A quantity of -2 means the customer returned 2 items.

Your calculation multiplies price by quantity. So a $50 item with quantity -2 gives you -$100 in revenue. You’re subtracting revenue when you should be tracking returns separately.

The data looks totally fine to your quality checks. Prices are positive. Quantities exist (they’re just negative). Revenue totals fall within expected ranges because returns are a small percentage of orders.

But your business logic is wrong. You’re treating returns as negative revenue instead of handling them properly.

Here’s what you should have written inside your logic:
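
One possible shape for the fix, assuming the business wants gross revenue and returns reported as two separate figures. That policy, the function name, and the output keys are all assumptions for this sketch:

```python
def summarize_daily_orders(orders):
    """Report sales and returns separately instead of netting them together."""
    gross_revenue = 0.0
    returns_value = 0.0
    for order in orders:
        amount = order["price"] * order["quantity"]
        if order["quantity"] < 0:
            # Negative quantity marks a return; store it as a positive amount.
            returns_value -= amount
        else:
            gross_revenue += amount
    return {"gross_revenue": gross_revenue, "returns_value": returns_value}
```

Whether net revenue should also be reported is a business decision; the point is that the choice is made explicitly rather than falling out of a multiplication.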

A unit test would have caught this:
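
A sketch of such a test in pytest style (plain `assert` inside a `test_` function). The function under test is a hypothetical stand-in, included so the snippet runs on its own:

```python
def calculate_revenue(orders):
    """Function under test (hypothetical): only sales count as revenue."""
    return sum(o["price"] * o["quantity"] for o in orders if o["quantity"] > 0)


def test_returns_do_not_reduce_revenue():
    orders = [
        {"price": 50.0, "quantity": 3},   # sale of three items
        {"price": 50.0, "quantity": -2},  # return of two items
    ]
    # A naive price * quantity sum would produce 50.0 and fail this assertion.
    assert calculate_revenue(orders) == 150.0
```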

The data passes all quality checks. But your logic is broken. That’s what unit tests catch.

⚠️ Real Example #2: Where Data Quality Checks Miss the Bug

The Scenario: Conversion Rate Calculation

You’re building a marketing dashboard that tracks conversion rates. The metric is simple: what percentage of users who view a product page actually make a purchase?

Here’s your code:
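
A minimal sketch of this kind of calculation, assuming a flat list of events with hypothetical `user_id` and `event_type` fields:

```python
def conversion_rate(events):
    """Naive version: all purchases divided by all page views, as a percentage."""
    page_views = sum(1 for e in events if e["event_type"] == "page_view")
    purchases = sum(1 for e in events if e["event_type"] == "purchase")
    if page_views == 0:
        return 0.0
    return purchases / page_views * 100


events = [
    {"user_id": "u1", "event_type": "page_view"},
    {"user_id": "u1", "event_type": "page_view"},
    {"user_id": "u1", "event_type": "purchase"},
    {"user_id": "u2", "event_type": "page_view"},
]
print(round(conversion_rate(events), 1))
```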

Your data quality checks:

- ✅ Conversion rate is between 0% and 100%

- ✅ No null values

- ✅ Page view count is positive

- ✅ Purchase count is positive

- ✅ Numbers are within expected ranges (2-5% conversion is normal)

Everything passes. The metric shows 3.2% conversion rate. Looks reasonable.

But there’s a critical flaw in your logic. You’re counting ALL page views and ALL purchases, regardless of whether they’re from the same user session.

A user might view a product 10 times over a week before finally purchasing. Your calculation counts 10 page views and 1 purchase. But that’s not how conversion works. You need to track unique user journeys.

Even worse, if a user purchases without viewing the product page first (maybe they clicked directly from an email), you’re counting a purchase with no corresponding page view. This inflates your conversion rate.

The data passes all checks. The percentage is within a reasonable range. But your business logic doesn’t match what conversion rate actually means.

What you should have written:
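
One way to express it, assuming conversion is defined per unique user and that only purchases by users who viewed the page count. That definition and the field names are assumptions; a per-session definition, or one that checks the view happened before the purchase, would need more logic than this sketch has:

```python
def conversion_rate(events):
    """Percentage of unique viewers who also purchased."""
    viewers = {e["user_id"] for e in events if e["event_type"] == "page_view"}
    buyers = {e["user_id"] for e in events if e["event_type"] == "purchase"}
    if not viewers:
        return 0.0
    # Buyers who never viewed the page are excluded by the intersection.
    return len(viewers & buyers) / len(viewers) * 100
```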

A unit test with realistic user behavior would catch this:
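
A sketch of such a test, built around the two behaviors described above: repeat views and a purchase with no page view. The function under test is a hypothetical per-user implementation, included so the snippet runs on its own:

```python
def conversion_rate(events):
    """Function under test (hypothetical): unique viewers who also purchased."""
    viewers = {e["user_id"] for e in events if e["event_type"] == "page_view"}
    buyers = {e["user_id"] for e in events if e["event_type"] == "purchase"}
    return len(viewers & buyers) / len(viewers) * 100 if viewers else 0.0


def test_conversion_counts_users_not_events():
    events = (
        [{"user_id": "u1", "event_type": "page_view"}] * 10  # ten views, one buyer
        + [{"user_id": "u1", "event_type": "purchase"}]
        + [{"user_id": "u2", "event_type": "page_view"}]      # viewed, never bought
        + [{"user_id": "u3", "event_type": "purchase"}]       # bought via email link
    )
    # Two unique viewers, one of whom purchased. An event-count ratio gives 2/11.
    assert conversion_rate(events) == 50.0
```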

Your data quality checks can’t tell you that your aggregation logic is conceptually wrong. The numbers look fine. But you’re measuring the wrong thing.

⚠️ Real Example #3: Where Data Quality Checks Miss the Bug

The Scenario: Join Logic Error

You’re creating a report showing all customers and their order counts. Simple aggregation. Here’s your Spark code:
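
The sketch below is not Spark code. It mimics the same inner-join behavior in plain Python so it can run without a cluster, and the `customer_id` field and function name are assumptions:

```python
from collections import Counter


def customer_order_counts(customers, orders):
    """Inner-join behavior: only customers with at least one order appear."""
    counts = Counter(order["customer_id"] for order in orders)
    return [
        {"customer_id": c["customer_id"], "order_count": counts[c["customer_id"]]}
        for c in customers
        if c["customer_id"] in counts  # customers without orders are dropped here
    ]
```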

Your data quality checks on the output:

- ✅ All customer_ids are valid

- ✅ No null order counts

- ✅ Order counts are positive integers

- ✅ No duplicate customer records

Everything passes. The data looks perfect.

But you used an INNER JOIN. Customers with no orders don’t appear in your report at all. They vanished.

Your stakeholder asks, “Where are all the new sign-ups from last week?” You don’t have an answer. They’re not in the report because they haven’t placed an order yet.

The data quality checks can’t catch this. There are no nulls. No invalid IDs. No anomalies in the data that exists. The problem is the data that doesn’t exist.

A unit test with the right test data would catch this:
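
A sketch of such a test in plain Python rather than Spark. The key is test data that includes a customer with no orders. The function under test is a hypothetical left-join-style implementation, included so the snippet runs on its own:

```python
from collections import Counter


def customer_order_counts(customers, orders):
    """Function under test (hypothetical): every customer appears, 0 if no orders."""
    counts = Counter(order["customer_id"] for order in orders)
    return {c["customer_id"]: counts[c["customer_id"]] for c in customers}


def test_customers_without_orders_are_included():
    customers = [{"customer_id": 1}, {"customer_id": 2}]  # customer 2 just signed up
    orders = [{"customer_id": 1}, {"customer_id": 1}]
    # An inner join would return only customer 1 and fail this assertion.
    assert customer_order_counts(customers, orders) == {1: 2, 2: 0}
```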

🔄 Real Example #4: Where Unit Tests Miss the Problem

The Scenario: Upstream Schema Change

Now let’s flip the coin. Here’s where unit tests fail you. You’re building a pipeline that ingests user data from an external API. You parse the JSON response and extract key fields.
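
A minimal sketch of such a parser. Only `user_id` comes from the scenario; the `email` field and the function name are assumptions:

```python
import json


def parse_user(raw_json):
    """Extract the fields the pipeline needs from one API response."""
    payload = json.loads(raw_json)
    return {
        "user_id": payload["user_id"],  # raises KeyError if the field is renamed
        "email": payload["email"],      # hypothetical second field
    }
```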

Your faithful unit test:
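
A sketch of what that test might look like, with a hand-written mock response. The parser and the `email` field are hypothetical, included so the snippet runs on its own:

```python
import json


def parse_user(raw_json):
    """Function under test (hypothetical)."""
    payload = json.loads(raw_json)
    return {"user_id": payload["user_id"], "email": payload["email"]}


def test_parse_user_extracts_expected_fields():
    # The mock reflects the API as it looked on the day the test was written.
    mock_response = json.dumps({"user_id": 42, "email": "ada@example.com"})
    assert parse_user(mock_response) == {"user_id": 42, "email": "ada@example.com"}
```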

Unit test passes. ✅ You deploy.

Two weeks later, the upstream team refactors their API. They change user_id to userId (camelCase). They don’t tell you because “it’s a minor change.”

Your pipeline breaks. Every single run fails with KeyError: 'user_id'.

Your unit test is perfect. Your code logic is correct. But the data structure changed, and your unit test uses mock data that doesn’t reflect reality.

What would catch this:
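
A minimal sketch of a schema check on incoming records, assuming they arrive as a list of dicts. The required field set and the function name are hypothetical; a dedicated validation library could do the same job:

```python
REQUIRED_FIELDS = frozenset({"user_id", "email"})  # assumed contract with the API


def check_schema(records, required_fields=REQUIRED_FIELDS):
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    for index, record in enumerate(records):
        missing = set(required_fields) - set(record)
        if missing:
            problems.append(f"record {index}: missing fields {sorted(missing)}")
    return problems


# After the upstream rename, the check reports the problem before parsing starts.
print(check_schema([{"userId": 1, "email": "a@example.com"}]))
# ["record 0: missing fields ['user_id']"]
```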

This data quality check runs on the actual data coming from the API. It catches schema changes immediately. Your code logic was fine. Your unit test was fine. But the real-world data didn’t match your assumptions.

🔄 Real Example #5: Where Unit Tests Miss the Problem

The Scenario: Duplicate Records from Source System

You’re aggregating daily active users from your application logs. Here’s your code:
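
A minimal sketch, assuming one day’s log events arrive as dicts with a hypothetical `user_id` field:

```python
def count_daily_active_users(events):
    """Count distinct users among one day's events."""
    return len({event["user_id"] for event in events})


events = [
    {"user_id": "u1", "action": "login"},
    {"user_id": "u1", "action": "click"},
    {"user_id": "u2", "action": "login"},
]
print(count_daily_active_users(events))  # 2
```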

Your unit test:
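
A sketch of such a test with clean, hand-crafted events. The function under test is a hypothetical stand-in, included so the snippet runs on its own:

```python
def count_daily_active_users(events):
    """Function under test (hypothetical): distinct users in one day's events."""
    return len({event["user_id"] for event in events})


def test_each_user_is_counted_once():
    events = [
        {"user_id": "u1", "action": "login"},
        {"user_id": "u1", "action": "click"},
        {"user_id": "u2", "action": "login"},
    ]
    assert count_daily_active_users(events) == 2
```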

Test passes. ✅ Logic is correct.

Then your upstream logging service has a bug. It starts double-logging events. Every user action gets written to the database twice.

Your aggregation logic still works perfectly. It counts unique users, so that one number survives the duplication. But everything else built on the same events (actions per user, session counts, engagement totals) is now doubled, because you’re aggregating events that shouldn’t exist in the first place.

Your dashboard shows 50,000 daily active users and twice their usual activity. In reality, half of those events are duplicates from the source system. Your business makes decisions based on inflated numbers.

Your code is doing exactly what it’s supposed to do. Your unit test validates that the logic works. But the input data is garbage.
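
What would catch this is a duplicate check on the incoming events. A minimal sketch, assuming an event is identified by `user_id`, `action`, and `timestamp` (hypothetical fields) and that any threshold for alerting is chosen by the team:

```python
def duplicate_event_ratio(events, key_fields=("user_id", "action", "timestamp")):
    """Share of rows that repeat an earlier event (0.0 means no duplicates)."""
    if not events:
        return 0.0
    unique_keys = {tuple(event[field] for field in key_fields) for event in events}
    return 1 - len(unique_keys) / len(events)


# Double-logged input: every event appears twice.
logged = [
    {"user_id": "u1", "action": "login", "timestamp": "2024-01-01T10:00:00"},
    {"user_id": "u1", "action": "login", "timestamp": "2024-01-01T10:00:00"},
    {"user_id": "u2", "action": "click", "timestamp": "2024-01-01T10:05:00"},
    {"user_id": "u2", "action": "click", "timestamp": "2024-01-01T10:05:00"},
]
print(duplicate_event_ratio(logged))  # 0.5
```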

This check runs on the actual data and catches anomalies that your unit test can’t see because your test uses clean, hand-crafted data.

🔄 Real Example #6: Where Unit Tests Miss the Problem

The Scenario: Business Logic Drift

You’re building a discount validation pipeline. Company policy says discounts can’t exceed 50%. Here’s your code:
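
A minimal sketch of that rule together with its unit test, assuming the discount arrives as a percentage. The constant and function names are hypothetical:

```python
MAX_DISCOUNT_PERCENT = 50  # company policy at the time the code was written


def is_valid_discount(discount_percent):
    """Accept discounts from 0% up to the policy limit."""
    return 0 <= discount_percent <= MAX_DISCOUNT_PERCENT


def test_discount_limit_is_enforced():
    assert is_valid_discount(50)      # exactly at the limit
    assert not is_valid_discount(70)  # above the limit
    assert not is_valid_discount(-5)  # negative discounts make no sense
```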

Test passes. ✅ Your logic matches the business rules. You deploy. Everything works great.

Three months later, marketing launches a massive promotional campaign. Black Friday. They need to offer 70% off to compete.

Your pipeline rejects every single transaction. Orders are getting dropped. Marketing is furious. Sales are lost. Your code is working perfectly according to the rules you coded. Your unit test validates those rules. But the business rules changed, and nobody updated your code.

What would catch this:
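
One option is to monitor how many transactions the rule rejects in production. A minimal sketch, where the 5% alert threshold, the `discount_percent` field, and the function names are all assumptions:

```python
def rejection_rate(transactions, is_valid):
    """Share of transactions that the validation rule rejects (0.0 to 1.0)."""
    if not transactions:
        return 0.0
    rejected = sum(1 for t in transactions if not is_valid(t))
    return rejected / len(transactions)


def check_rejection_rate(transactions, is_valid, max_rate=0.05):
    """Return (passed, rate); max_rate=0.05 is an assumed alert threshold."""
    rate = rejection_rate(transactions, is_valid)
    return rate <= max_rate, rate


# Promotion traffic where most orders carry a 70% discount.
black_friday = [{"discount_percent": 70}] * 3 + [{"discount_percent": 10}]
passed, rate = check_rejection_rate(
    black_friday, lambda t: 0 <= t["discount_percent"] <= 50
)
print(passed, rate)  # False 0.75
```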

This data quality check monitors the behavior in production. It catches when your “correct” code is actually rejecting valid business transactions. Your logic is correct for the old rules. But the rules changed.

📏 Drawing the Line: What Each Should Test

Now that you’ve seen both sides fail, let’s draw the line clearly.

Unit Tests Should Verify:

- Your transformation logic is correct: Does my calculation work as intended?

- Edge cases are handled: What happens with nulls, negative values, empty lists, returns?

- Your joins work as intended: Am I using the right join type? Are keys matching correctly?

- Your calculations produce expected results: Does this aggregation logic give me the right answer?

- Conditional logic handles all branches: Do all my if/else paths work correctly?

Data Quality Checks Should Verify:

- Data conforms to expected schema: Are the fields I need actually present?

- Values are within expected ranges: Is this revenue number suspiciously high or low?

- No unexpected nulls or missing data: Are required fields populated?

- Data freshness and completeness: Is this data recent? Are we missing records?

- Relationships between tables are maintained: Do foreign keys match? Are there orphaned records?

- Volume anomalies: Did we suddenly get 10x more records than usual?

Conclusion

Here’s the thing you need to know: Don’t pick one over the other. You need both.

Unit tests don't directly check your data quality, but they're essential for preventing bad code from producing bad data in the first place.

Think of it this way:

- Unit tests prevent YOU from creating bad data

- Data quality checks prevent OTHERS from sending you bad data

- Both protect your data quality, just at different stages

The question isn’t “which one should I use?” The question is “am I testing my logic or validating my data?”

If you’re testing logic → write unit tests. If you’re validating data → add quality checks.

Without unit tests, your buggy code will pollute your data warehouse. Without data quality checks, upstream issues will pollute your data warehouse.

Both layers are essential. They catch different problems. Together, they keep your pipelines reliable.